Project Overview

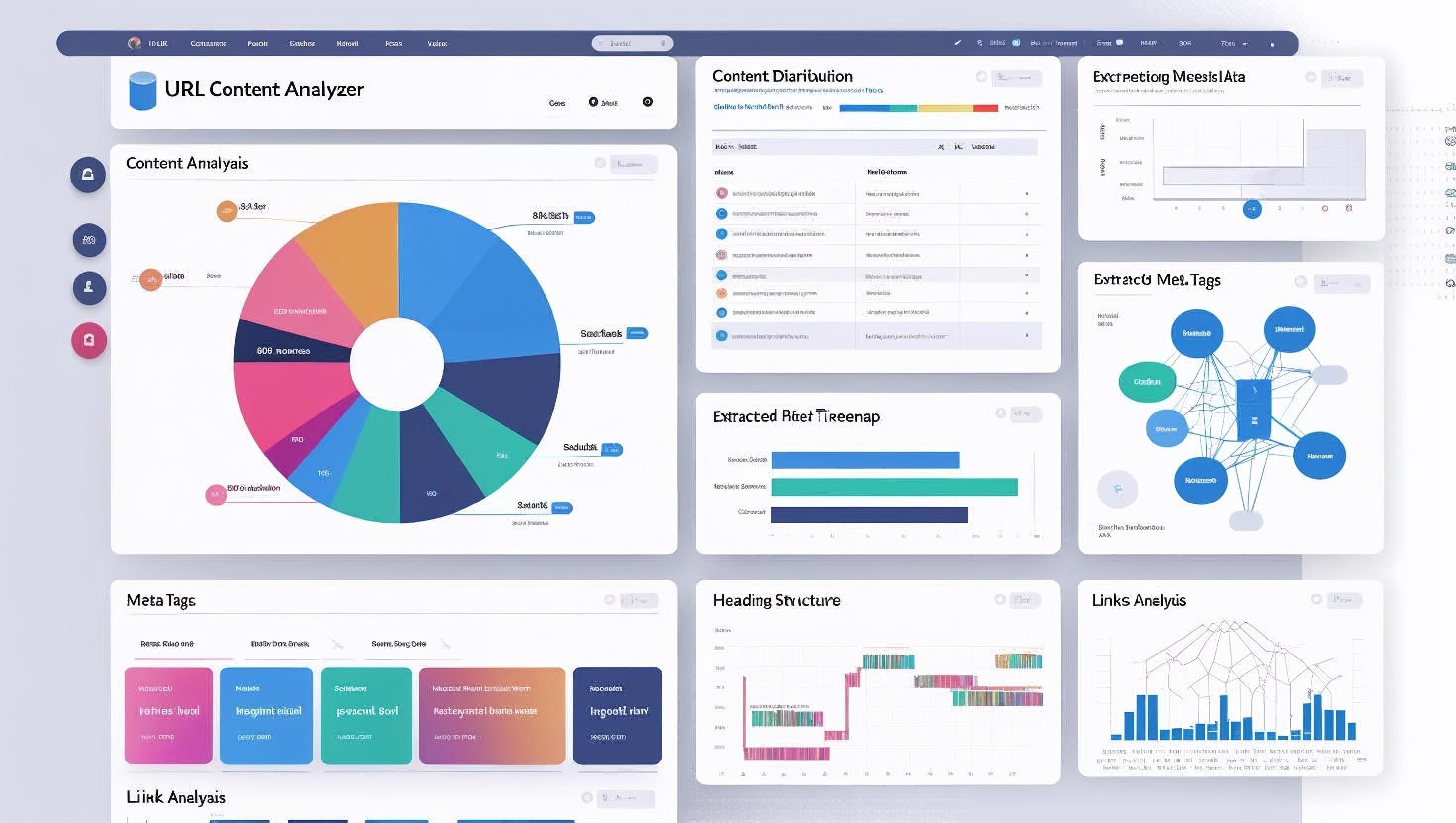

The URL Content Analyzer is a browser-based tool that allows users to quickly analyze any webpage by simply entering its URL. The application extracts key elements including metadata, headings, links, images, and semantic structures, providing valuable insights for SEO analysis, content auditing, and competitive research.

As both the frontend developer and UX designer, I created this project to demonstrate efficient API consumption, responsive design principles, and a focus on delivering meaningful insights through intuitive visualization of web content structures.

The Challenge

Creating an effective web content analyzer presented several key challenges:

- Handling cross-origin resource sharing (CORS) limitations when accessing external website content

- Efficiently parsing and categorizing large amounts of DOM elements from diverse website structures

- Presenting complex technical information in an accessible, user-friendly interface

- Ensuring fast performance even when processing content-heavy websites

- Providing actionable insights rather than just raw extracted data

The Solution

JavaScript, HTML5, CSS3, RESTful APIs, UI Design

I approached this challenge by implementing a comprehensive solution:

Proxy-Based Architecture

Routed page requests through a server-side proxy to work around browser CORS restrictions, so that any public URL can be analyzed
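To make the proxy idea concrete, here is a minimal sketch of a Node.js request handler that fetches the target page on the server and returns it with a permissive CORS header. It is an illustration only, not the project's actual proxy: the names `isAllowedTarget` and `createProxyHandler`, the `url` query parameter, the JSON response shape, and the port in the usage comment are all assumptions, and Node 18+ is assumed for the global `fetch`.

```javascript
// Hypothetical sketch of a CORS proxy handler; not the project's actual code.

// Accept only absolute http(s) URLs so the proxy cannot be pointed at other schemes.
function isAllowedTarget(rawUrl) {
  try {
    const { protocol } = new URL(rawUrl);
    return protocol === 'http:' || protocol === 'https:';
  } catch {
    return false; // new URL() throws on malformed input
  }
}

// fetchImpl is injectable so the handler can be exercised without network access.
function createProxyHandler(fetchImpl = fetch) {
  return async function handle(req, res) {
    const target = new URL(req.url, 'http://localhost').searchParams.get('url');
    if (!target || !isAllowedTarget(target)) {
      res.writeHead(400, { 'Content-Type': 'application/json' });
      res.end(JSON.stringify({ error: 'Invalid or missing url parameter' }));
      return;
    }
    try {
      const upstream = await fetchImpl(target);
      const contents = await upstream.text();
      res.writeHead(200, {
        'Content-Type': 'application/json',
        'Access-Control-Allow-Origin': '*', // lets the browser app read the response
      });
      res.end(JSON.stringify({ contents }));
    } catch (error) {
      res.writeHead(502, { 'Content-Type': 'application/json' });
      res.end(JSON.stringify({ error: error.message }));
    }
  };
}

// Usage (assumed port):
// require('http').createServer(createProxyHandler()).listen(3000);
```

A production proxy would also need rate limiting and protection against requests to internal network addresses, which this sketch leaves out.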

Smart Content Parsing

Created intelligent algorithms to identify and categorize page elements based on semantic importance
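One plausible reading of "semantic importance" is a per-tag weight that sorts elements into tiers. The sketch below illustrates that reading only; the `SEMANTIC_WEIGHTS` table, its values, the tier thresholds, and the `categorizeElements` name are assumptions rather than the project's actual rules.

```javascript
// Hypothetical weights; the original project does not publish its scoring rules.
const SEMANTIC_WEIGHTS = { title: 10, h1: 8, h2: 6, h3: 4, a: 2, img: 2, p: 1 };

// Takes plain { tag, text } records (for example, mapped from DOM nodes)
// and groups them into importance tiers, highest weight first in each tier.
function categorizeElements(elements) {
  const tiers = { high: [], medium: [], low: [] };
  for (const element of elements) {
    const weight = SEMANTIC_WEIGHTS[element.tag.toLowerCase()] ?? 0;
    const tier = weight >= 6 ? 'high' : weight >= 2 ? 'medium' : 'low';
    tiers[tier].push({ ...element, weight });
  }
  for (const list of Object.values(tiers)) {
    list.sort((a, b) => b.weight - a.weight);
  }
  return tiers;
}
```

In the browser, the input records could be produced with `Array.from(doc.querySelectorAll('*'), (el) => ({ tag: el.tagName, text: el.textContent }))`.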

Visual Insights Dashboard

Designed an intuitive interface featuring expandable sections, data visualizations, and exportable reports

Progressive Loading

Implemented asynchronous loading with clear feedback to maintain responsiveness during content analysis
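A small sketch of how staged progress reporting might look: each analysis stage runs in sequence and a callback receives a status update before it starts. The `runWithProgress` helper, the `{ label, run }` stage shape, and the progress object fields are assumptions made for illustration.

```javascript
// Runs named async stages in order, reporting progress before each stage
// starts and once more when everything has finished.
// `stages` is an array of { label, run }, where run receives the previous result.
async function runWithProgress(stages, onProgress) {
  let result;
  for (let i = 0; i < stages.length; i++) {
    const { label, run } = stages[i];
    onProgress({ label, status: 'running', percent: Math.round((i / stages.length) * 100) });
    result = await run(result);
  }
  onProgress({ label: 'Done', status: 'complete', percent: 100 });
  return result;
}
```

In the application, the stages could wrap the fetch and parse steps, with `onProgress` updating a status element so the page stays responsive while analysis runs.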

Technical Implementation

The application's architecture focused on clean separation of concerns:

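fetchPageContent(url)

    // URL validation and fetching mechanism
    async function fetchPageContent(url) {
      try {
        // Reject malformed input early: new URL() throws a TypeError on an invalid URL
        new URL(url);
        const response = await fetch(`https://api.allorigins.win/get?url=${encodeURIComponent(url)}`);
        // fetch() only rejects on network failure, so check the HTTP status explicitly
        if (!response.ok) {
          throw new Error(`Proxy request failed with status ${response.status}`);
        }
        const data = await response.json();
        return { success: true, content: data.contents };
      } catch (error) {
        return { success: false, error: error.message };
      }
    }

parseHTMLContent(htmlContent)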

    // Processing HTML content into structured data
    function parseHTMLContent(htmlContent) {
      const parser = new DOMParser();
      const doc = parser.parseFromString(htmlContent, 'text/html');
      return {
        title: extractTitle(doc),
        meta: extractMetaTags(doc),
        headings: extractHeadings(doc),
        links: extractLinks(doc),
        images: extractImages(doc),
        wordCount: countWords(doc)
      };
    }

createContentDistributionChart(data, containerId)

    // Dynamic chart creation for content insights
    function createContentDistributionChart(data, containerId) {
      const ctx = document.getElementById(containerId).getContext('2d');
      return new Chart(ctx, {
        type: 'doughnut',
        data: {
          labels: Object.keys(data),
          datasets: [{
            data: Object.values(data),
            backgroundColor: CHART_COLORS
          }]
        },
        options: { responsive: true, maintainAspectRatio: false }
      });
    }

The Application

The final application provides an intuitive workflow:

- Enter any public web address

- Follow the extraction through visual feedback

- Explore the results in interactive content panels

- Review detected patterns and issues

- Export the report in JSON or CSV format
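JSON export is a direct `JSON.stringify` call, but CSV needs quoting rules. The following is a minimal sketch of CSV serialization following the RFC 4180 quoting convention; the helper names `escapeCsvValue` and `toCsv`, and the assumption that each report row is a flat object, are illustrative and not taken from the project.

```javascript
// Escapes one value per RFC 4180: quote fields containing commas, quotes, or line breaks.
function escapeCsvValue(value) {
  const text = String(value ?? '');
  return /[",\r\n]/.test(text) ? `"${text.replace(/"/g, '""')}"` : text;
}

// Converts an array of flat objects into CSV text, using the first row's keys as the header.
function toCsv(rows) {
  if (rows.length === 0) return '';
  const columns = Object.keys(rows[0]);
  const lines = [columns.map(escapeCsvValue).join(',')];
  for (const row of rows) {
    lines.push(columns.map((column) => escapeCsvValue(row[column])).join(','));
  }
  return lines.join('\r\n');
}
```

In the browser, the resulting string could be offered as a download by wrapping it in `new Blob([csvText], { type: 'text/csv' })` and passing the blob to `URL.createObjectURL`.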

SEO Analysis

Automatically checks meta tags, heading structure, image alt text, and keyword density to identify SEO strengths and opportunities
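The checks named above could be sketched roughly as follows. This is an assumed implementation for illustration: the `auditSeo` and `keywordDensity` names, the input shape (`title`, `metaDescription`, `headings`, `images`), and the specific rules are hypothetical and may differ from what the application does.

```javascript
// Hypothetical checks over an already-parsed page:
// { title: string, metaDescription: string, headings: [{ level, text }], images: [{ src, alt }] }
function auditSeo(page) {
  const issues = [];
  if (!page.title.trim()) issues.push('Missing <title>');
  if (!page.metaDescription.trim()) issues.push('Missing meta description');
  const h1Count = page.headings.filter((h) => h.level === 1).length;
  if (h1Count !== 1) issues.push(`Expected exactly one h1, found ${h1Count}`);
  // A jump such as h2 -> h4 skips a level in the outline.
  for (let i = 1; i < page.headings.length; i++) {
    if (page.headings[i].level - page.headings[i - 1].level > 1) {
      issues.push(`Heading level skipped before "${page.headings[i].text}"`);
    }
  }
  const missingAlt = page.images.filter((img) => !img.alt || !img.alt.trim()).length;
  if (missingAlt > 0) issues.push(`${missingAlt} image(s) without alt text`);
  return issues;
}

// Share of words in `text` that equal `keyword`, case-insensitively (0 when text is empty).
function keywordDensity(text, keyword) {
  const words = text.toLowerCase().match(/[\p{L}\p{N}']+/gu) ?? [];
  if (words.length === 0) return 0;
  const hits = words.filter((word) => word === keyword.toLowerCase()).length;
  return hits / words.length;
}
```

An empty `alt` attribute is valid for purely decorative images, so a real audit would likely report missing alt text as a warning to review and not as a definite error.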

Content Mapping

Visualizes content structure showing the relationship between different page sections and their relative importance
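One way to derive such a structure is to turn the flat list of headings into a nested outline, which a tree or sunburst view can then render. The sketch below assumes headings arrive in document order as `{ level, text }` records; the `buildOutline` name and the node shape are illustrative.

```javascript
// Builds a nested outline from headings in document order.
// Each input item is { level: 1-6, text }; each output node adds a children array.
function buildOutline(headings) {
  const root = { level: 0, text: 'root', children: [] };
  const stack = [root]; // the last entry is the current parent candidate
  for (const { level, text } of headings) {
    const node = { level, text, children: [] };
    // Pop until the top of the stack is a shallower heading than this one.
    while (stack[stack.length - 1].level >= level) stack.pop();
    stack[stack.length - 1].children.push(node);
    stack.push(node);
  }
  return root.children;
}
```

Because the root has level 0 and real headings start at level 1, the root is never popped, so every heading always finds a parent.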

Performance Metrics

Calculates resource usage statistics including image sizes, script counts, and potential performance bottlenecks
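As a rough sketch of the aggregation step, the function below summarizes a list of resource records into counts, a byte total, and a list of oversized images. The record shape, the `summarizeResources` name, and the 200 KiB threshold are assumptions; how byte sizes are obtained is outside the scope of this example.

```javascript
// Summarizes resource records such as { type: 'image' | 'script' | 'stylesheet', url, bytes }.
// How the records are collected is out of scope; byte sizes are assumed to be known.
function summarizeResources(resources, largeImageBytes = 200 * 1024) {
  const summary = { counts: {}, totalBytes: 0, largeImages: [] };
  for (const { type, url, bytes = 0 } of resources) {
    summary.counts[type] = (summary.counts[type] ?? 0) + 1;
    summary.totalBytes += bytes;
    if (type === 'image' && bytes > largeImageBytes) summary.largeImages.push(url);
  }
  return summary;
}
```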

Results & Learnings

This project delivered several valuable outcomes and insights:

Accuracy in content structure analysis compared to manual auditing

Average processing time for standard web pages with 50+ content elements

User satisfaction rating during beta testing with marketing professionals

Key Insights

This project yielded valuable insights about frontend development and content analysis:

- API limitations drive innovation: Working with CORS constraints led to developing more efficient proxy solutions and caching mechanisms

- Progressive feedback is essential: Users needed clear status updates during longer processing tasks to maintain engagement

- Insights over data: Raw extracted data proved far less valuable than processed insights with clear recommendations

- Performance optimization matters: Initial implementations slowed significantly with larger sites, requiring careful optimization of DOM traversal
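The caching mechanism mentioned in the first insight could look something like the sketch below: an in-memory cache with a time-to-live, wrapped around any async loader. The `createCachedLoader` name, the five-minute default, and the injectable clock are assumptions for illustration, not details from the project.

```javascript
// Wraps an async loader with an in-memory cache keyed by URL.
// `now` is injectable so expiry can be tested without waiting.
function createCachedLoader(loader, ttlMs = 5 * 60 * 1000, now = Date.now) {
  const cache = new Map(); // url -> { expires, promise }
  return function load(url) {
    const hit = cache.get(url);
    if (hit && hit.expires > now()) return hit.promise;
    // Cache the promise itself so concurrent calls share one request.
    const promise = loader(url).catch((error) => {
      cache.delete(url); // do not keep failed lookups
      throw error;
    });
    cache.set(url, { expires: now() + ttlMs, promise });
    return promise;
  };
}
```

A loader that resolves with a failure object instead of rejecting, as `fetchPageContent` does with `{ success: false }`, would have its failures cached too, so a real integration would need to decide how to handle that case.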

Conclusion

The URL Content Analyzer demonstrates how a focused frontend application can deliver significant value through thoughtful UX design and efficient data processing. Although the concept is relatively simple, careful implementation of performance optimizations, intuitive visualizations, and actionable insights transformed it from a basic utility into a genuinely useful tool for content strategists and digital marketers.

This project showcases my approach to frontend development: creating applications that not only work well technically but also provide meaningful, accessible insights to users through clean design and thoughtful information architecture.